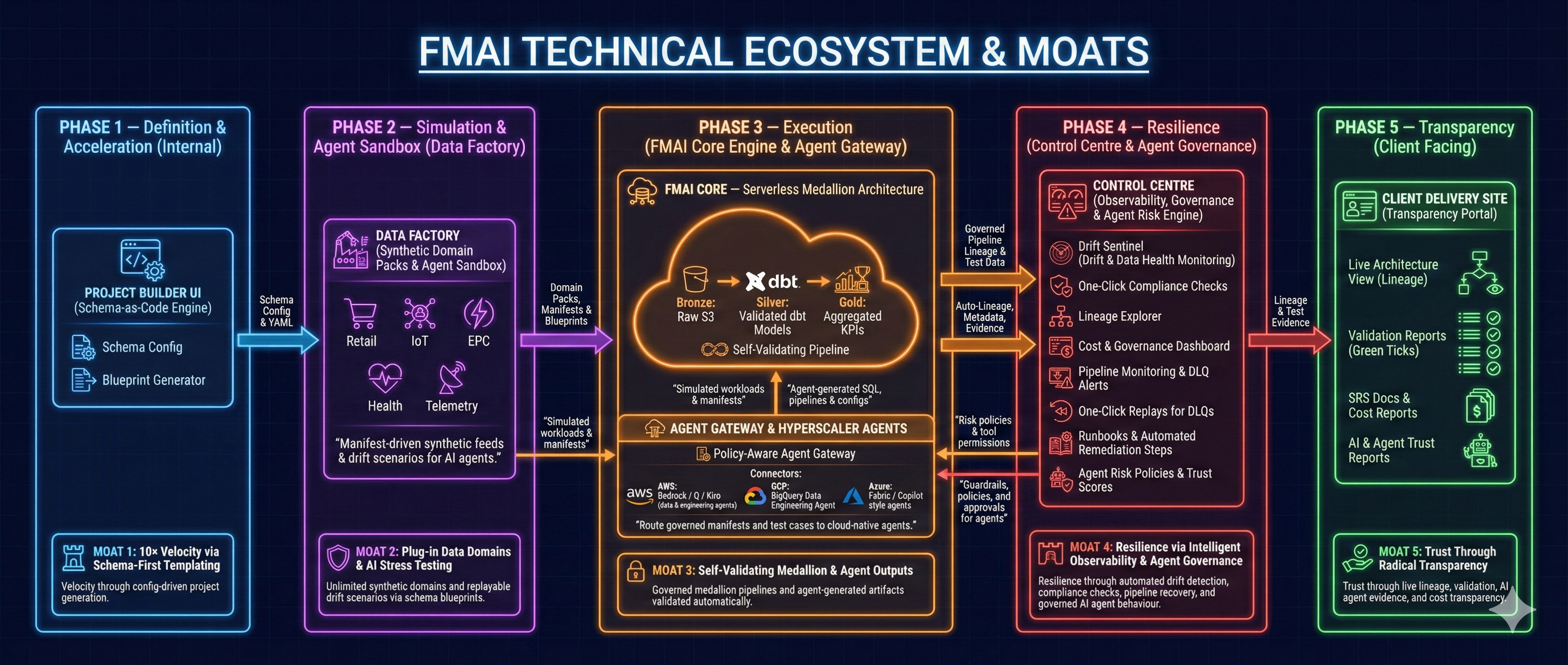

Fast Medallion AI (FMAI) v2.0

FMAI is a Governance & Simulation Layer for Hyperscaler Agents.

While Hyperscaler Agents (such as those built on Amazon Bedrock or Google Vertex AI) can generate code and pipelines, they lack built-in business context, safety controls, and alignment with business rules. FMAI provides the "Guardrails & Proving Ground" required to deploy agent-generated data solutions with confidence.

It connects Operations Teams with AI Agents through a control plane that enforces:

1. Simulation: Every agent output is sandboxed and tested against synthetic data before deployment.
2. Governance: Full audit trails of prompts, tokens, PII redaction, and costs.
3. Trust: Cryptographically signed "Trust Reports" that record the evidence that a solution passed simulation and was approved.

5-Layer Governance Model

FMAI implements a verified chain of custody for AI-generated assets:

1. The Manifest Gate

Every data product starts with a Manifest: a single YAML configuration defined by a human.

- Schema Validation: Ensures each request matches the platform's capabilities.
- Version History: All changes to the "ask" are tracked (GitOps for Agents).
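The two Manifest Gate checks can be sketched as follows. The sketch assumes the YAML manifest has already been parsed into a Python dict, for example with a YAML library. The field names (`name`, `cadence`, `fields`) and the set of supported cadences are hypothetical. A content hash stands in for version tracking: any change to the "ask" produces a new version identifier that could be committed alongside the manifest.

```python
import hashlib
import json

# Hypothetical manifest schema: required keys and their expected Python types.
REQUIRED_FIELDS = {"name": str, "cadence": str, "fields": list}
# Hypothetical set of cadences the platform is assumed to support.
SUPPORTED_CADENCES = {"hourly", "daily"}


def validate_manifest(manifest: dict) -> list[str]:
    """Return a list of validation errors (empty if the manifest is valid)."""
    errors = []
    for key, expected_type in REQUIRED_FIELDS.items():
        if key not in manifest:
            errors.append(f"missing required field: {key}")
        elif not isinstance(manifest[key], expected_type):
            errors.append(f"field {key} must be {expected_type.__name__}")
    cadence = manifest.get("cadence")
    if isinstance(cadence, str) and cadence.lower() not in SUPPORTED_CADENCES:
        errors.append(f"unsupported cadence: {cadence}")
    return errors


def manifest_version(manifest: dict) -> str:
    """Short content hash of the manifest; identical for any key ordering."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Example manifest, as it might look after parsing the YAML file.
manifest = {
    "name": "retail_daily_sales",
    "cadence": "Daily",
    "fields": [{"name": "customer_email", "tags": ["semantic:pii"]}],
}
print(validate_manifest(manifest), manifest_version(manifest))
```

Hashing a canonical JSON form means that reordering keys in the YAML does not create a spurious new version.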

2. Metadata & Cadence Controls

FMAI manages the "heartbeat" of the data platform.

- Cadence Management: Schedules agent runs (e.g., hourly, daily).
- Behavior Templates: Define how agents react to missing data or schema drift.
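The AWS deployment model in this README lists EventBridge for cadence. The following minimal sketch maps manifest cadences to EventBridge-style rate expressions (`rate(1 hour)`, `rate(1 day)`) and models a behavior template as a pair of enums. The action names (`skip_run`, `quarantine`, and so on) are hypothetical and are not FMAI's actual template vocabulary.

```python
from dataclasses import dataclass
from enum import Enum


class OnMissingData(Enum):
    """Hypothetical reactions when a scheduled run finds no input data."""
    SKIP_RUN = "skip_run"
    USE_LAST_SNAPSHOT = "use_last_snapshot"
    ALERT_AND_HALT = "alert_and_halt"


class OnSchemaDrift(Enum):
    """Hypothetical reactions when the input schema has drifted."""
    QUARANTINE = "quarantine"
    ALERT_AND_HALT = "alert_and_halt"


# Manifest cadence names mapped to EventBridge-style rate expressions.
CADENCE_TO_SCHEDULE = {
    "hourly": "rate(1 hour)",
    "daily": "rate(1 day)",
}


@dataclass(frozen=True)
class BehaviorTemplate:
    on_missing_data: OnMissingData
    on_schema_drift: OnSchemaDrift


def schedule_for(cadence: str) -> str:
    """Translate a manifest cadence into a schedule expression."""
    try:
        return CADENCE_TO_SCHEDULE[cadence.lower()]
    except KeyError:
        raise ValueError(f"unknown cadence: {cadence}") from None


def decide(template: BehaviorTemplate, data_present: bool, drift_detected: bool) -> str:
    """Return the action a run should take; missing data is checked before drift."""
    if not data_present:
        return template.on_missing_data.value
    if drift_detected:
        return template.on_schema_drift.value
    return "proceed"
```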

3. Agent Governance & Redaction

Before a prompt reaches the Hyperscaler (Bedrock/Vertex):

- PII Redaction: Fields tagged semantic:pii are automatically masked.

- Prompt Logging: Full audit of the input context and the raw model response.

- Cost Controls: Token counters prevent "runaway" agent loops.
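The redaction and cost-control steps above can be sketched as below, assuming field tags arrive as a simple mapping from field name to tag list. `MASK` and `TokenBudget` are hypothetical names. The budget is charged after each model call, so it stops the loop before the next call rather than interrupting one already in flight.

```python
PII_TAG = "semantic:pii"
MASK = "***REDACTED***"


def redact(record: dict, field_tags: dict[str, list[str]]) -> dict:
    """Return a copy of record with every field tagged semantic:pii masked."""
    return {
        key: MASK if PII_TAG in field_tags.get(key, []) else value
        for key, value in record.items()
    }


class TokenBudgetExceeded(RuntimeError):
    """Raised when cumulative token usage would pass the configured cap."""


class TokenBudget:
    """Cumulative token counter that halts a runaway agent loop."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.used = 0

    def charge(self, prompt_tokens: int, completion_tokens: int) -> None:
        total = self.used + prompt_tokens + completion_tokens
        if total > self.max_tokens:
            # Leave `used` unchanged so the overage is reported, not recorded.
            raise TokenBudgetExceeded(f"budget of {self.max_tokens} tokens exceeded ({total})")
        self.used = total


record = {"customer_email": "a@example.com", "basket_total": 42.5}
tags = {"customer_email": ["semantic:pii"]}
print(redact(record, tags))
```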

4. Simulation Enforcement (The "Sandbox")

Agent-generated code (SQL, Terraform, Python) is never deployed directly. It is first routed to the Simulator, which:

- Spins up ephemeral infrastructure.
- Injects synthetic data (using FMAI's "Data Factory" behaviors).
- Measures schema drift and runs anomaly detection.
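This README does not specify which drift or anomaly metrics the Simulator computes. The sketch below assumes two common choices:

- a column/type diff for schema drift;
- a z-score test for anomalies, with a conventional threshold of 3.0.

Both are illustrative stand-ins, not FMAI's documented method.

```python
from statistics import mean, pstdev


def schema_drift(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare column->type maps and report added, removed, and retyped columns."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(col for col in shared if expected[col] != observed[col]),
    }


def zscore_anomalies(values: list[float], threshold: float = 3.0) -> list[int]:
    """Indices of values whose |z-score| (population std dev) exceeds threshold."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # constant series: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


expected = {"order_id": "int", "total": "float"}
observed = {"order_id": "string", "total": "float", "coupon": "string"}
print(schema_drift(expected, observed))
```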

5. Transparency & Trust Reports

The final deliverable is not just code, but a Trust Report:

- Signed Evidence: A PDF/JSON bundle containing the manifest, the simulation logs, and the approval timestamp.
- Cost Summary: Exact token cost for that specific feature build.
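The signing scheme for Trust Reports is not specified here. The minimal sketch below bundles the elements listed above and signs a canonical JSON form with HMAC-SHA256 from the Python standard library. HMAC is a substitute chosen for a self-contained example. Because it is symmetric, anyone who verifies also holds the signing key, so a client-facing report would more plausibly use an asymmetric signature, for example one backed by a managed key service. The field names are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def canonical(obj: dict) -> bytes:
    """Deterministic JSON encoding so the signer and the verifier hash identical bytes."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")


def build_trust_report(manifest: dict, simulation_log: list[str], token_cost_usd: float, key: bytes) -> dict:
    """Bundle the evidence and attach an HMAC-SHA256 signature over it."""
    body = {
        "manifest": manifest,
        "simulation_log": simulation_log,
        "approved_at": datetime.now(timezone.utc).isoformat(),
        "token_cost_usd": token_cost_usd,
    }
    signature = hmac.new(key, canonical(body), hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_trust_report(report: dict, key: bytes) -> bool:
    """True only if the body is byte-for-byte what was signed with this key."""
    expected = hmac.new(key, canonical(report["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, report["signature"])
```

Any edit to the bundle after signing, such as changing the reported cost, causes verification to fail.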

Orchestration Flow

The following sequence highlights the interaction between the Human Operator, the Governance Layer, and the AI Agent.

```mermaid
sequenceDiagram
    participant Ops as Operations Team
    participant AG as Agent Gateway
    participant HA as Hyperscaler Agent
    participant Sim as FMAI Simulator
    participant Gov as Governance Log
    participant Client as Client Portal

    Ops->>AG: 1. Send Manifest (Scope)
    AG->>HA: 2. Invoke Agent (Sanitized)
    HA-->>AG: 3. Return Pipeline Proposal
    AG->>Gov: Log Prompts & Tokens
    AG->>Sim: 4. Stage & Simulate
    Sim-->>Ops: 5. Drift/Anomaly Results
    Ops->>Gov: 6. Approve (or Edit + Diff)
    Gov->>Client: 7. Publish Trust Report
```
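One way to enforce the ordering in this sequence is a small state machine that rejects skipped steps, so that no proposal can be approved or published without first being simulated. The stage names below are hypothetical labels for steps 1 to 7. The sketch treats "Edit + Diff" as part of the approval step, since the diagram does not show an edit looping back to simulation.

```python
from enum import Enum


class Stage(Enum):
    """Stages of one run, in the order shown in the sequence diagram."""
    MANIFEST_RECEIVED = 1
    AGENT_INVOKED = 2
    PROPOSAL_RETURNED = 3
    SIMULATED = 4
    APPROVED = 5
    PUBLISHED = 6


class Run:
    """Tracks a single manifest through the flow and records its history."""

    def __init__(self) -> None:
        self.stage = Stage.MANIFEST_RECEIVED
        self.history = [self.stage]

    def advance(self, to: Stage) -> None:
        # Only the immediately following stage is allowed.
        if to.value != self.stage.value + 1:
            raise ValueError(f"cannot move from {self.stage.name} to {to.name}")
        self.stage = to
        self.history.append(to)


run = Run()
for stage in list(Stage)[1:]:
    run.advance(stage)
print(run.stage.name)
```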

Detailed System Components

1. Agent Gateway (The "Firewall for AI")

A lightweight, cloud-native service (Lambda/Cloud Run) that wraps Hyperscaler SDKs.

- Role: It acts as the secure boundary for the system.

- Key Function: Enforces redaction tags (security:redact) before data leaves the environment.

- Observability: Logs 100% of prompt/response pairs to an immutable DynamoDB ledger for audit compliance.
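DynamoDB does not make a table immutable by itself, so an audit ledger usually needs an extra tamper-evidence mechanism. The sketch below assumes one such technique, a hash chain in which each entry commits to the hash of the previous entry. Any later edit to a logged prompt or response then breaks verification. The ledger is kept in memory, and the stub `agent` callable stands in for a Bedrock or Vertex SDK call. Both are hypothetical; no real SDK API is used.

```python
import hashlib
import json
from typing import Callable

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class HashChainedLedger:
    """Append-only log where each entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, prompt: str, response: str, tokens: int) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"prompt": prompt, "response": response, "tokens": tokens, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False if any entry was altered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def gateway_call(prompt: str, agent: Callable[[str], tuple[str, int]], ledger: HashChainedLedger) -> str:
    """Invoke the (already redacted) prompt and log the pair before returning."""
    response, tokens = agent(prompt)
    ledger.append(prompt, response, tokens)
    return response
```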

2. The Control Centre

The central dashboard for Operations teams.

- Simulation Panel: Visualizes real-time data flow, drift metrics, and anomaly scores during the "Proving" phase.
- Approval Queue: A "Pull Request" style interface where humans review Agent proposals (SQL/Code).
- Correction Loop: Operators can edit agent code directly. FMAI captures the Diff to fine-tune future prompts.
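The README does not describe how captured diffs feed back into prompts. The sketch below covers only the capture step of the Correction Loop. It uses the standard library's `difflib.unified_diff` to record how an operator changed an agent's SQL. The file labels are hypothetical.

```python
import difflib


def capture_correction(agent_sql: str, operator_sql: str) -> str:
    """Unified diff between the agent's proposal and the operator's edit."""
    diff = difflib.unified_diff(
        agent_sql.splitlines(keepends=True),
        operator_sql.splitlines(keepends=True),
        fromfile="agent_proposal.sql",
        tofile="operator_edit.sql",
    )
    return "".join(diff)  # empty string when the operator made no changes


proposal = "SELECT *\nFROM orders\n"
edited = "SELECT order_id, total\nFROM orders\n"
print(capture_correction(proposal, edited))
```

A unified diff keeps the correction small, human-readable, and easy to store next to the prompt log for the same proposal.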

3. Data Factory (Simulator Engine)

The engine behind the simulation. It doesn't just generate random strings; it generates Data Behaviors.

- Retail Scenarios: Simulates Point-of-Sale spikes, inventory drift, and customer churn signals.
- IoT Scenarios: Simulates sensor failures, thermal runaways, and telemetry gaps.
- Healthcare Scenarios: Generates PII-heavy claim updates to test redaction effectiveness.
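The IoT scenarios can be sketched as a behavior-driven generator rather than random noise. The code below shows a seeded sensor stream with three injected behaviors:

- telemetry gaps, emitted as `None`;
- a sensor failure, emitted as a stuck sentinel value of -999.0;
- a thermal runaway, where temperature compounds upward by 10% per step.

The parameters, the sentinel value, and the 20 °C baseline are all hypothetical choices for illustration.

```python
import random
from typing import Optional


def simulate_sensor(
    n: int,
    seed: int = 0,
    gap_prob: float = 0.05,
    failure_at: Optional[int] = None,
    runaway_from: Optional[int] = None,
) -> list[Optional[float]]:
    """Generate n temperature readings with optional injected failure behaviors."""
    rng = random.Random(seed)  # seeded so a simulation run is reproducible
    readings: list[Optional[float]] = []
    temp = 20.0
    for t in range(n):
        if rng.random() < gap_prob:
            readings.append(None)  # telemetry gap: the reading never arrived
            continue
        if failure_at is not None and t >= failure_at:
            readings.append(-999.0)  # sensor failure: stuck sentinel value
            continue
        if runaway_from is not None and t >= runaway_from:
            temp *= 1.1  # thermal runaway: compounding temperature rise
        else:
            temp = 20.0 + rng.gauss(0, 0.5)  # normal operation around 20 °C
        readings.append(round(temp, 2))
    return readings


print(simulate_sensor(10, seed=42, gap_prob=0.1, runaway_from=6))
```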

4. Client Delivery Portal

A read-only view for end-stakeholders.

- Trust Reports: Access to the cryptographically signed PDFs proving system reliability.
- Cost/Burn Dashboards: View project-level AI spend.
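A project-level burn dashboard ultimately rests on per-call token costs. The sketch below rolls logged calls up by project. The per-1K-token prices are hypothetical placeholders; real prices vary by model and provider. It uses `Decimal` so that currency sums do not accumulate floating-point error.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical USD prices per 1,000 tokens; not actual Bedrock or Vertex pricing.
PRICE_PER_1K = {"input": Decimal("0.003"), "output": Decimal("0.015")}


def call_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one model call from its input and output token counts."""
    return (
        input_tokens * PRICE_PER_1K["input"] + output_tokens * PRICE_PER_1K["output"]
    ) / 1000


def burn_by_project(calls: list[tuple[str, int, int]]) -> dict[str, Decimal]:
    """Sum call costs per project from (project, input_tokens, output_tokens) rows."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for project, input_tokens, output_tokens in calls:
        totals[project] += call_cost(input_tokens, output_tokens)
    return dict(totals)


log = [("retail", 1200, 300), ("retail", 800, 200), ("iot", 500, 100)]
print(burn_by_project(log))
```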

Component Readiness

| Component | Status | Notes |
|---|---|---|
| Manifest CLI | ✅ Mature | Schema validation & versioning active. |
| Simulator | ✅ Mature | Formerly "Data Factory"; proving drift flows. |
| Control Centre | 🔄 Updating | Adding new "Agent Governance" panels. |
| Agent Gateway | 🚧 In-Dev | Wrapping Bedrock/Vertex SDKs. |
| Trust Reports | 🚧 In-Dev | PDF/JSON signing pipelines. |

Deployment Models

FMAI is designed for Cloud-Native deployment with a "Bring Your Own Cloud" model:

- AWS Implementation:
  - Compute: Lambda (Gateway), EventBridge (Cadence).
  - Storage: DynamoDB (Governance Logs), S3 (Trust Reports).
  - AI: Amazon Bedrock.
- GCP Implementation:
  - Compute: Cloud Functions, Pub/Sub.
  - Storage: BigQuery (Telemetry).
  - AI: Vertex AI.

Portfolio Relevance

This project demonstrates leadership in Agentic AI Architecture, moving beyond simple "chatbots" to solving the hard problems of Enterprise AI Reliability:

- System Design: Event-driven architecture for agent orchestration.
- Safety Engineering: Implementing PII guards and cost controls.
- Full-Stack Governance: From strict backend logs to transparent frontend "Trust Reports."