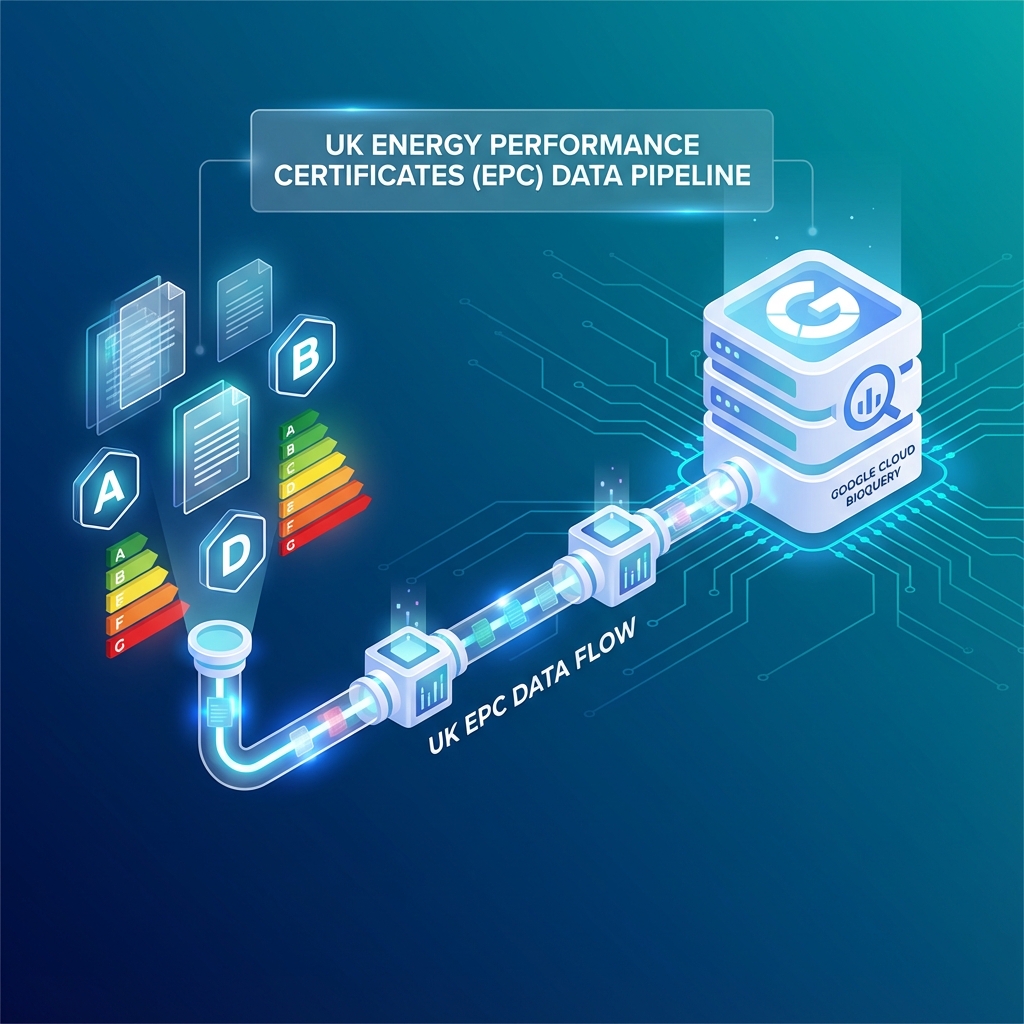

DAME — EPC → BigQuery Ingestion & Enrichment

Industrial‑grade, minimal‑ops pipeline to ingest UK EPC (Energy Performance Certificate) data from the Open Data Communities API, land it as JSON in GCS, and load into BigQuery with curated views ready for analytics and GenAI/RAG.

Why this exists

- JSON in, JSON out: Avoids brittle CSV parsing and schema drift.

- Idempotent: Checkpoints in GCS prevent double work.

- Partitioned & clustered: Cheap scans and fast filters in BigQuery.

- Separation of concerns: HTTP client, loaders, orchestration, SQL views are cleanly split.

- One‑command local run or BigQuery Studio Pipeline (scheduled 08:00 Europe/London).

High‑level architecture

flowchart TD

API["EPC API JSON"] --> Ingest["Ingest Scripts<br>domestic.py | nondomestic.py"]

Ingest -->|Normalize| Data{"Normalized Data"}

subgraph Storage

GCS["GCS Bucket<br>epc/json/..."]

BQ["BigQuery<br>raw_json tables"]

end

Data -->|Load| GCS

Data -->|Load| BQ

subgraph Analytics

Views["Curated Views<br>enr_*_certificates_v"]

Recs["Recommendations"]

end

BQ --> Views

BQ -.-> Recs

Repo layout (key paths)

app/dame_epc/

├─ settings.py # .env + env loader

├─ schema.py # table names, landing schema, view SQL helpers

├─ epc_api.py # HTTP-only EPC client (search-after, retries)

├─ io_utils.py # GCS & BigQuery helpers

├─ logging_setup.py # JSON logging

├─ state.py # GCS checkpoints (is_done/mark_done/clear)

├─ domestic.py # monthly domestic ingest (JSON → GCS → BQ)

├─ nondomestic.py # monthly non-domestic ingest

├─ bulk_recommendations.py # per‑LMK or yearly ZIP recommendations

└─ main.py # orchestrator (loop months/kinds, checkpoints)

cloud/dame-prod-473718/

└─ bigquery/

├─ pipeline/pipeline.md # BQ Studio Pipeline playbook (Prod)

└─ sql/views/ # curated view DDLs (templated with {{PROJECT}}, {{DATASET}})

scripts/

├─ apply_views.py # create/replace all views from SQL files

└─ month_ingest_local.sh # local helper to run orchestrator + apply views

README.md

Prerequisites

- Google Cloud project with BigQuery and GCS enabled.

- EPC API credentials from Open Data Communities.

- gcloud CLI configured with Application Default Credentials (ADC).

Quickstart — one command

chmod +x scripts/month_ingest_local.sh

# Run a month (or range), include recommendations, then apply views

./scripts/month_ingest_local.sh \

--env .env \

--start 2024-01 --end 2024-01 \

--kinds domestic,non-domestic \

--with-recs \

--apply-views

This will: 1. Ingest certs month‑by‑month. 2. Optionally fetch recommendations. 3. Write NDJSON.gz to GCS. 4. Load into partitioned/clustered BigQuery raw tables. 5. Apply curated views.

Data model (landing schema)

| Column | Type | Mode | Notes |

|---|---|---|---|

| lmk_key | STRING | REQUIRED | Certificate LMK (primary key surrogate) |

| lodgement_date | DATE | NULLABLE | Partition field (if present) |

| postcode | STRING | NULLABLE | |

| uprn | STRING | NULLABLE | Treat as string to avoid int pitfalls |

| payload | JSON | NULLABLE | Full source record |